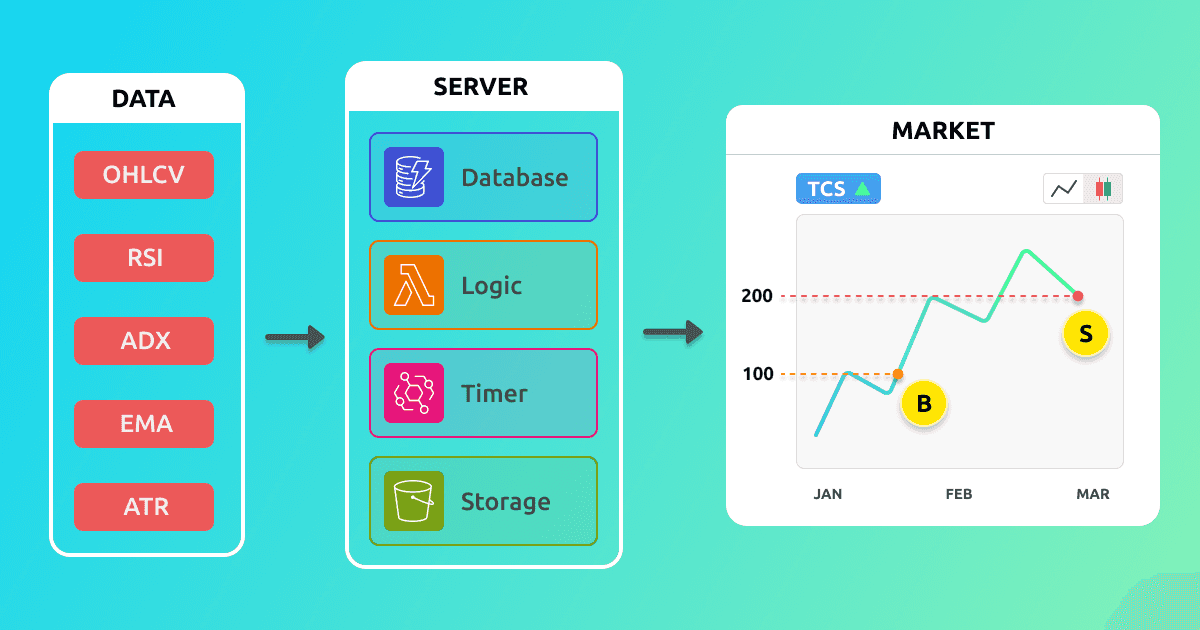

The following is a case study of my automated trading system. This post will walk through the process of building the system, the components and technologies used, how it works, and the challenges faced.

As a front-end developer, I had not yet learned how to build back-end systems. I had built a basic ExpressJS app with MongoDB a few years ago, but that was the extent of my experience. My deployment skills were limited to static front-end websites using Netlify and GitHub Pages. Working with AWS was like navigating a minefield. I spent 2 months taking Udemy courses, watching YouTube tutorials, and reading blogs to get a handle on AWS services.

Being proficient in the technical aspects of the system was not enough. I also had to have a thorough understanding of the markets. Before implementing the system, I spent many months learning technical analysis of the stock market. I did not intend to build the system while studying the markets. The idea of building the system came to me later.

The system is built primarily with Python. I chose Python because it is one of the most popular languages for finance and data-related applications. It is also the primary language for many data science libraries, such as Pandas, NumPy, and TA-Lib.

Pandas is used to handle large tabular datasets. NumPy is a numerical computation library that performs fast array operations on large datasets. TA-Lib provides the functions required to calculate the Relative Strength Index (RSI), Moving Averages (MA), and other indicators.
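To make the indicator math concrete, here is a minimal, dependency-free sketch of what a moving average and an RSI compute. It is not how TA-Lib implements them: the names `sma` and `rsi_simple` are made up for illustration, and this RSI uses plain averages of gains and losses over the window, so its values will generally differ from a library that applies smoothing.

```python
from statistics import mean


def sma(prices, period):
    """Simple moving average; None until `period` prices are available."""
    out = []
    for i in range(len(prices)):
        if i + 1 < period:
            out.append(None)
        else:
            out.append(mean(prices[i + 1 - period : i + 1]))
    return out


def rsi_simple(prices, period=14):
    """RSI from plain averages of the last `period` price changes."""
    changes = [b - a for a, b in zip(prices, prices[1:])]
    out = [None] * len(prices)
    for i in range(period, len(prices)):
        window = changes[i - period : i]  # the changes ending at price i
        avg_gain = sum(c for c in window if c > 0) / period
        avg_loss = sum(-c for c in window if c < 0) / period
        if avg_loss == 0:
            out[i] = 100.0  # no losses in the window (assumed convention)
        else:
            rs = avg_gain / avg_loss
            out[i] = 100.0 - 100.0 / (1.0 + rs)
    return out


closes = [1, 2, 1, 2, 1]
print(sma(closes, 3))
print(rsi_simple(closes, period=2))
```

In the real system the same calculations would run over a full price column per stock, which is where the compiled libraries pay off.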

All of these libraries are backed by compiled C or C++ code, which is the main source of their speed. This speed is necessary to run calculations for hundreds of stocks, each of which has 200 or more days of historical data.

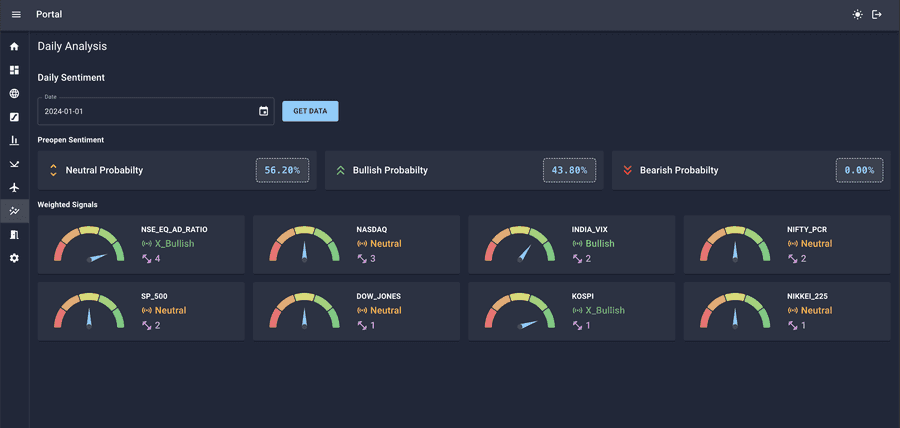

The following is a dashboard that presents data based on the trades executed by the system. It is built with Streamlit and hosted on Streamlit Cloud. I chose Streamlit because it allows browser applications to be written in Python, which gives access to analytical libraries such as NumPy, Pandas, and SciPy that are not available in a JavaScript environment.

You can access the hosted dashboard at Automated Trading System Dashboard, and the source code for the project is available in the GitHub repo.

AWS Lambda functions are comparable to microservices. They can be invoked manually, through API Gateway, or on a schedule. The objective is to divide your application into tiny bits of logic, with each function performing a single task. For example, a Lambda function can get a day's trade signals from a database and place an order on the market by calling the broker API. If you configure it to, Lambda will retry a function when an invocation fails or crashes.
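The single-task idea can be sketched as one small function that reads a day's signals and places one order per signal. This is only an illustration: `place_orders_for_day`, the signal fields (`symbol`, `side`, `quantity`), and the two injected callables are hypothetical stand-ins for the real database and broker clients, which the post does not show.

```python
def place_orders_for_day(trade_date, fetch_signals, place_order):
    """Fetch one day's signals and send one order per signal.

    `fetch_signals(trade_date)` and `place_order(symbol, side, quantity)`
    are injected so the logic can be tested without a database or broker.
    """
    results = []
    for signal in fetch_signals(trade_date):
        try:
            order_id = place_order(
                signal["symbol"], signal["side"], signal["quantity"]
            )
            results.append(
                {"symbol": signal["symbol"], "status": "placed", "order_id": order_id}
            )
        except Exception as exc:
            # Record the failure and continue, so one rejected order
            # does not block the remaining signals.
            results.append(
                {"symbol": signal["symbol"], "status": "failed", "error": str(exc)}
            )
    return results
```

Inside a Lambda handler, the two callables would wrap the actual database query and the broker API call, and the returned list could be logged or sent as a notification.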

Following is the code snippet on how to set up a Lambda function using the Python runtime:

```typescript
const saveLiquidStocksTokenFn = new lambda.Function(
  this,
  "SaveLiquidStocksTokenFn",
  {
    // Can use Java, JavaScript, etc.
    runtime: lambda.Runtime.PYTHON_3_9,
    handler: "save_liquid_stocks.lambda_handler",
    code: lambda.Code.fromAsset("src/stock_token/fno_stocks"),
    timeout: Duration.minutes(5),
    // Faster and cheaper, but complex setup
    architecture: lambda.Architecture.ARM_64,
    // Common code shared between functions
    layers: [pandasTaLibLayer, utilsLayer],
    // Pass environment variables
    environment: {
      S3_BUCKET_NAME: csvBucket.bucketName,
      SYSTEM_CONFIG_TABLE: systemConfigTableV1.tableName,
    },
    // More memory, more speed, more cost
    memorySize: 512,
  }
);
```

Following is the skeleton code of the Lambda function:

```python
import logging
import os

import boto3
import pandas as pd
import requests
from botocore.exceptions import ClientError

import send_telegram

# Access AWS resources using the boto3 library
s3_client = boto3.client('s3')
dynamodb = boto3.resource('dynamodb')

# Access environment variables passed while creating the Lambda function
s3_bucket_name = os.environ['S3_BUCKET_NAME']
system_config_table_name = os.environ['SYSTEM_CONFIG_TABLE']
# The table handle must use the table name, not the bucket name
table = dynamodb.Table(system_config_table_name)


def lambda_handler(event, context):
    try:
        # Logic
        send_telegram.t_success("Success")
    except Exception as e:
        send_telegram.t_error(f" Exception: ``` {e}```")
        logging.error(f" Exception: {e}")
```

S3 is a cloud-based storage service that I use to store all of my stock symbol data in CSV format. I chose S3 over DynamoDB because CSV files can be large, and I need to be able to download them in bulk. DynamoDB is not a good fit for this task because it caps each item at 400 KB and is not designed for storing and retrieving whole files.

Following is the code to set up the S3 bucket:

```typescript
const csvBucket = new s3.Bucket(this, "Bucket", {
  removalPolicy: RemovalPolicy.RETAIN,
});
```
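As a rough sketch of how per-symbol CSV data could move in and out of such a bucket, the helpers below serialize rows with the standard `csv` module and hand the bytes to an S3 client. The helper names, the column names, and the key layout are assumptions for illustration; `s3_client` is expected to behave like `boto3.client("s3")`, of which only `put_object` and `get_object` are used.

```python
import csv
import io


def rows_to_csv_bytes(rows, fieldnames):
    """Serialize a list of dicts to UTF-8 encoded CSV with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fieldnames)
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue().encode("utf-8")


def upload_csv(s3_client, bucket, key, rows, fieldnames):
    """Store the rows as a single CSV object in the bucket."""
    s3_client.put_object(
        Bucket=bucket, Key=key, Body=rows_to_csv_bytes(rows, fieldnames)
    )


def download_csv(s3_client, bucket, key):
    """Read a CSV object back into a list of dicts (all values are strings)."""
    body = s3_client.get_object(Bucket=bucket, Key=key)["Body"].read()
    return list(csv.DictReader(io.StringIO(body.decode("utf-8"))))
```

Passing the client in as an argument keeps the helpers easy to test with an in-memory stand-in, and the downloaded rows can be handed to Pandas for the actual calculations.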

DynamoDB is where I store all the data about trading signals, past trades, and their PNL data.
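A minimal sketch of how a signal could be written and read back, assuming a table whose primary key is a string partition key `pk` plus a string sort key `sk`. The `SIGNAL#<date>` key format, the attribute names, and the helper functions are hypothetical; `table` is expected to behave like a boto3 DynamoDB `Table` resource, of which only `put_item` and `get_item` are used.

```python
def signal_key(trade_date, symbol):
    """Composite key: all signals for a day share one partition."""
    return {"pk": f"SIGNAL#{trade_date}", "sk": symbol}


def save_signal(table, trade_date, symbol, side, quantity):
    """Write one signal item and return what was stored."""
    item = {**signal_key(trade_date, symbol), "side": side, "quantity": quantity}
    table.put_item(Item=item)
    return item


def load_signal(table, trade_date, symbol):
    """Return the stored item, or None when the key does not exist."""
    response = table.get_item(Key=signal_key(trade_date, symbol))
    return response.get("Item")
```

Grouping a day's signals under one partition key is one possible design; it would let a single query fetch everything for a date, at the cost of deciding the key format up front.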

```typescript
const systemConfigTableV1 = new db.Table(this, "SystemConfigTable", {
  partitionKey: {
    name: "pk",
    type: db.AttributeType.STRING,
  },
  // The partition key and sort key together make up the primary key
  sortKey: {
    name: "sk",
    type: db.AttributeType.STRING,
  },
  // Billed for provisioned capacity rather than per request
  billingMode: db.BillingMode.PROVISIONED,
  removalPolicy: RemovalPolicy.RETAIN,
  readCapacity: 2,
  writeCapacity: 1,
});
```

Amazon EventBridge is where the event to run a function at a particular time is created. It is similar to a cron job. This was the second most important AWS service to me, as I have more than 20 cron jobs, and that number will keep increasing as the project grows.
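When reasoning about a weekly schedule it helps to compute when it will next fire. The sketch below assumes a rule that runs at 13:40 UTC every Friday (AWS evaluates rule cron expressions in UTC); `next_weekly_run` is a hypothetical helper for checking the timing locally, not part of any AWS API.

```python
from datetime import datetime, timedelta, timezone

FRIDAY = 4  # datetime.weekday(): Monday is 0


def next_weekly_run(now, weekday=FRIDAY, hour=13, minute=40):
    """Return the next UTC datetime strictly after `now` on the schedule."""
    now = now.astimezone(timezone.utc)
    candidate = now.replace(hour=hour, minute=minute, second=0, microsecond=0)
    candidate += timedelta(days=(weekday - now.weekday()) % 7)
    if candidate <= now:
        # Today's slot has already passed, so move to next week.
        candidate += timedelta(days=7)
    return candidate


wednesday_noon = datetime(2024, 1, 3, 12, 0, tzinfo=timezone.utc)
run = next_weekly_run(wednesday_noon)
print(run.isoformat())

# Illustrative only: view the same instant in a UTC+05:30 local zone.
local_zone = timezone(timedelta(hours=5, minutes=30))
print(run.astimezone(local_zone).isoformat())
```

Converting the result to local time is a quick way to confirm that a job lines up with the intended point in the trading day.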

Following is the code to set the cron schedule:

```typescript
// Create the schedule: 13:40 UTC every Friday
const tokenGeneratorFnCron = new events.Rule(
  this,
  "TokenGeneratorFnCronRule",
  {
    schedule: events.Schedule.cron({
      minute: "40",
      hour: "13",
      weekDay: "FRI",
      month: "*",
      year: "*",
    }),
  }
);

// Call the function on the above schedule
tokenGeneratorFnCron.addTarget(
  new targets.LambdaFunction(saveLiquidStocksTokenFn)
);
```

API Gateway is used to build APIs. These APIs can be linked to Lambda functions, which means I can call a Lambda function by calling an API endpoint.
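On the Lambda side, a handler behind an HTTP API route reads its inputs from the event and returns a status code and body. The sketch below assumes a route with a `{date}` path parameter and the HTTP API event shape that exposes it under `pathParameters`; the response payload (an empty `trades` list) is a placeholder, not the system's real data.

```python
import json
from datetime import date


def _response(status_code, payload):
    """Build the dict shape API Gateway expects from a Lambda integration."""
    return {
        "statusCode": status_code,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(payload),
    }


def lambda_handler(event, context):
    # pathParameters can be missing or None when the route has no parameters.
    raw_date = (event.get("pathParameters") or {}).get("date", "")
    try:
        requested = date.fromisoformat(raw_date)
    except ValueError:
        return _response(400, {"error": "date must be an ISO date such as 2024-01-05"})
    # Placeholder: a real handler would look up the trades for this date.
    return _response(200, {"date": requested.isoformat(), "trades": []})
```

Validating the path parameter before touching the database keeps malformed requests cheap and gives the front end a clear error to display.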

Following is the code to set up the API Gateway:

```typescript
const httpApiV1 = new HttpApi(this, "Api", {
  corsPreflight: {
    allowHeaders: ["Authorization", "Content-Type"],
    allowMethods: [
      CorsHttpMethod.GET,
      CorsHttpMethod.POST,
      CorsHttpMethod.PUT,
      CorsHttpMethod.DELETE,
    ],
    allowOrigins: ["*"],
    maxAge: Duration.days(10),
  },
});

httpApiV1.addRoutes({
  path: "/demo/{date}",
  methods: [HttpMethod.GET],
  integration: new HttpLambdaIntegration(
    "DemoApi",
    DemoLambdaFunction, // Lambda function to call
  ),
  authorizer,
});
```

Amazon Cognito provides secure access to your app's backend resources in AWS or any service behind Amazon API Gateway. I used it so that I can access my AWS services from my front-end website.

The front end is built with ReactJS, Material UI, and SWR. It is an admin portal for the system, where I can control the configurations, such as how much funds to allocate to each strategy.

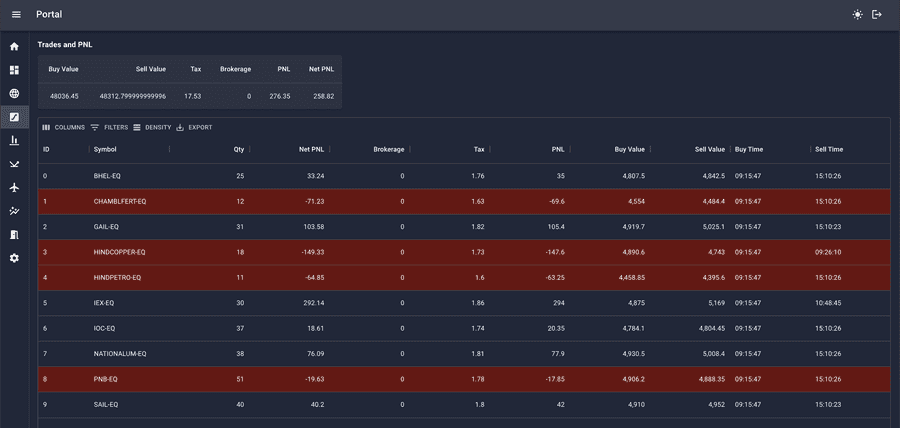

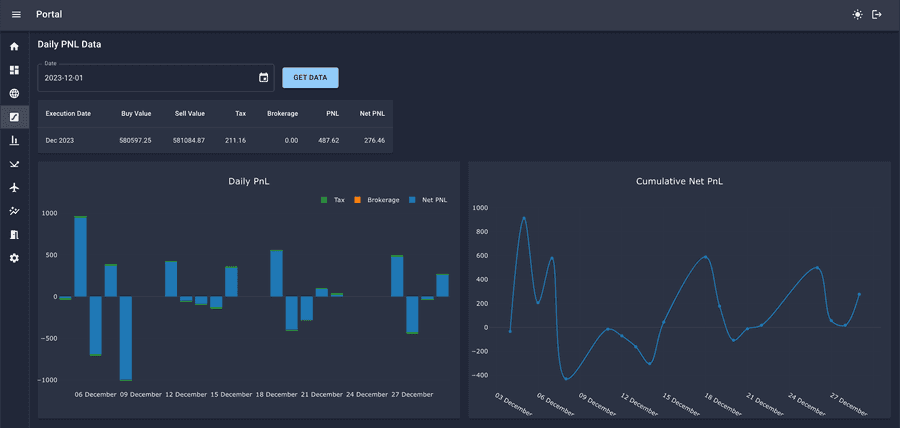

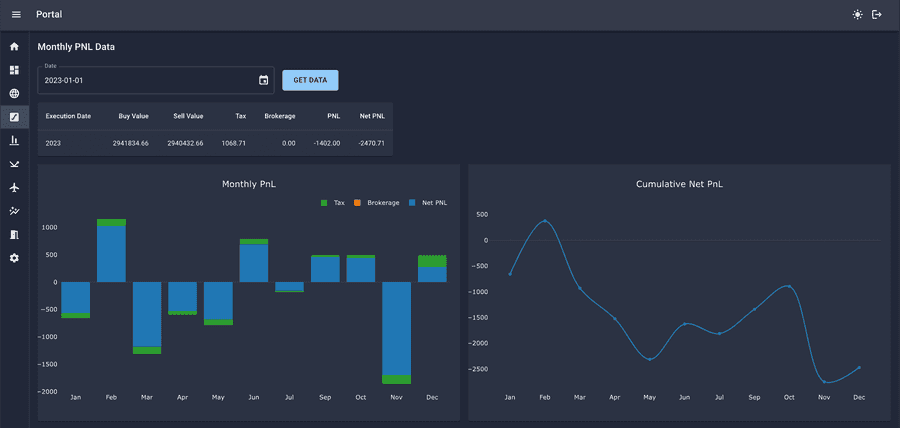

It also shows the upcoming trade signals, the past trade signals along with their PNL data. Additionally, it shows plots of daily and monthly performance and cumulative PNL. All of this data is fetched from DynamoDB through APIs built with API Gateway.
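The daily, monthly, and cumulative figures can all be derived from a flat list of trades. The sketch below assumes each trade is a dict with an ISO `date` string and a numeric `pnl`; those field names and the function names are illustrative, and the post does not say whether this aggregation runs in a Lambda or in the browser.

```python
from collections import defaultdict
from itertools import accumulate


def daily_pnl(trades):
    """Sum PNL per trading day, ordered by date."""
    totals = defaultdict(float)
    for trade in trades:
        totals[trade["date"]] += trade["pnl"]
    return dict(sorted(totals.items()))


def monthly_pnl(daily):
    """Roll daily totals up to YYYY-MM buckets."""
    totals = defaultdict(float)
    for day, pnl in daily.items():
        totals[day[:7]] += pnl
    return dict(sorted(totals.items()))


def cumulative_pnl(daily):
    """Running total of daily PNL, in date order."""
    return dict(zip(daily.keys(), accumulate(daily.values())))


trades = [
    {"date": "2024-01-30", "pnl": 100.0},
    {"date": "2024-01-30", "pnl": -40.0},
    {"date": "2024-01-31", "pnl": 25.0},
    {"date": "2024-02-01", "pnl": -10.0},
]
daily = daily_pnl(trades)
print(daily)
print(monthly_pnl(daily))
print(cumulative_pnl(daily))
```

ISO date strings sort chronologically, which is why plain string sorting and slicing are enough here.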

There is also an option to square off all trades during emergencies.
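Squaring off means closing every open position with an opposite order. A minimal sketch of that logic, assuming positions are dicts with a `symbol` and a signed `quantity` (positive for long, negative for short); the field names and the `BUY`/`SELL` labels are hypothetical, and sending the resulting orders to the broker is left out.

```python
def square_off_orders(open_positions):
    """Return the orders that would flatten every open position."""
    orders = []
    for position in open_positions:
        quantity = position["quantity"]
        if quantity == 0:
            continue  # already flat, nothing to close
        orders.append(
            {
                "symbol": position["symbol"],
                # Sell to close a long position, buy to close a short one.
                "side": "SELL" if quantity > 0 else "BUY",
                "quantity": abs(quantity),
            }
        )
    return orders
```

Keeping the order generation separate from the broker call makes it possible to review or log the exit orders before anything is sent.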

Following are some screenshots of the admin portal: Daily Signals, Daily Trades, Daily PNL, Monthly PNL, and Daily Analysis.

To reduce costs, I used AWS serverless architecture for this project. It has a generous free tier that is appropriate for small projects. I used the AWS CDK (Cloud Development Kit) to set it up. It is an infrastructure-as-code tool like Terraform, but it is designed exclusively for AWS services. Keep in mind that AWS CDK is not limited to serverless applications. The output of a CDK project is a CloudFormation template, which instructs AWS on how to configure all of the services.

AWS CDK can be used with TypeScript, Python, and other languages. I used TypeScript because of the type checking that CDK provides when combined with VS Code.

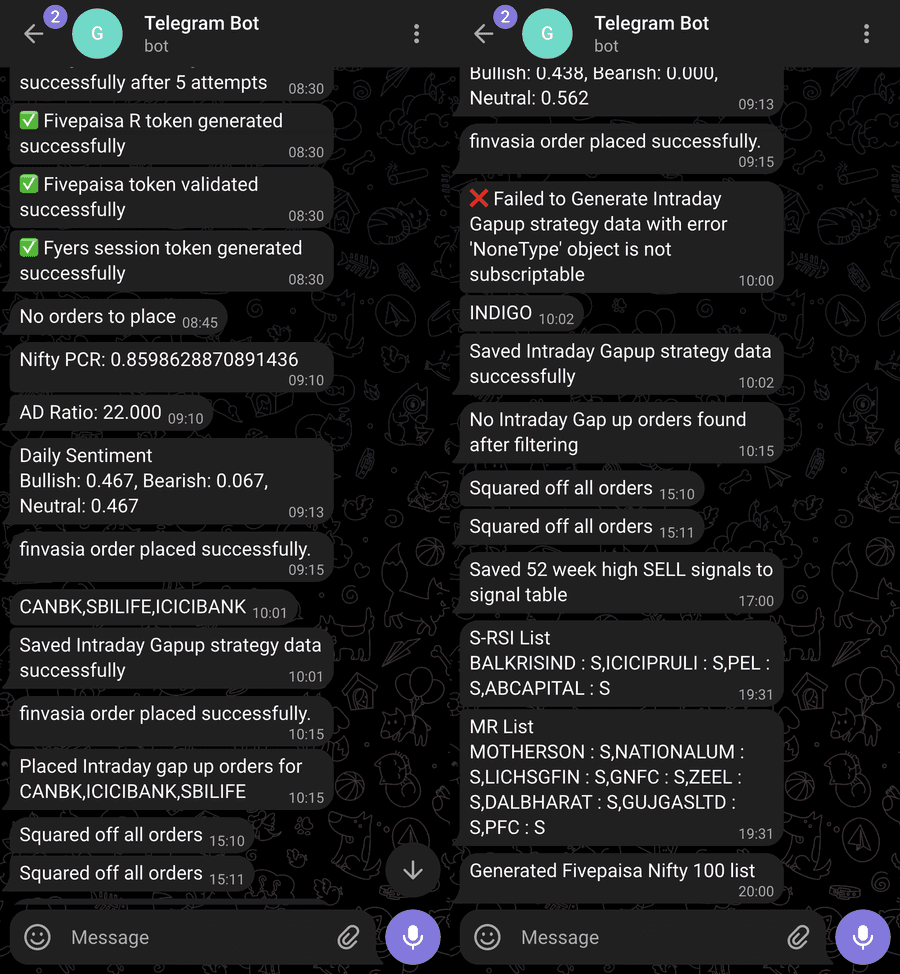

Software and apps will inevitably experience problems, errors, and crashes. The same was true for me. Because my entire app was built on the broker's API, there was always a possibility of failure. I could have integrated a service like Sentry.io or Rollbar. However, I opted for a simpler solution: a Telegram bot.

All you have to do is make an API request with the content and bot token, and you'll get a notification in your Telegram app. I set up all of my success messages and errors on Telegram, along with the error code or message.
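A minimal sketch of such a notification, using only the standard library and the Telegram Bot API's `sendMessage` method. The function names, the placeholder token, and the chat ID are assumptions for illustration; the post's own `send_telegram` module is not shown, so this is not its actual implementation.

```python
import json
import urllib.request

TELEGRAM_API = "https://api.telegram.org"


def build_send_message_request(bot_token, chat_id, text):
    """Build (but do not send) a POST request for the sendMessage method."""
    payload = json.dumps({"chat_id": chat_id, "text": text}).encode("utf-8")
    return urllib.request.Request(
        url=f"{TELEGRAM_API}/bot{bot_token}/sendMessage",
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_message(bot_token, chat_id, text, timeout=10):
    """Send the message and return Telegram's decoded JSON response."""
    request = build_send_message_request(bot_token, chat_id, text)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)


# Placeholder credentials; nothing is sent until send_message is called.
request = build_send_message_request("<bot-token>", "<chat-id>", "Order placed")
print(request.full_url)
```

Splitting request construction from sending keeps the network call in one place, so success and error helpers can share it and tests never need to reach Telegram.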

Telegram

The system is currently under development and various features are still being worked on to enhance its functionalities. As the development progresses, I will be constantly adding more strategies and refining the existing ones. At the same time, I will also be improving my engineering skills to ensure better output.